Robots that look like people might seem futuristic, but they raise more questions than answers. As these machines begin to mirror our faces, voices, and mannerisms, concerns grow. Realism can blur the line between human and machine and create new ethical challenges. So, let’s break down ten key issues that emerge when machines start acting, and looking, a little too human.

Threat To Human Identity

Technology shapes how we view ourselves, and humanlike robots raise worries about losing a sense of individuality. Humans might feel less valued if machines can perfectly mimic emotions and expressions. Keeping the difference between artificial and human life clear may help people preserve their self-worth and confidence.

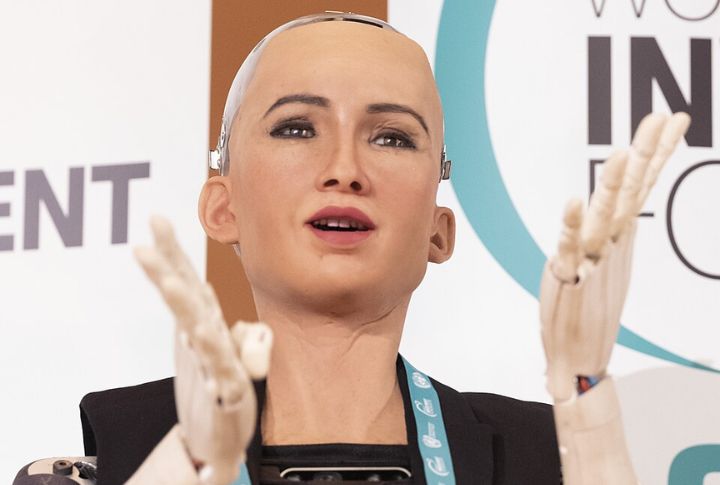

Emotional Manipulation

Lifelike machines can inspire trust more easily than expected. Their realistic expressions often prompt emotional responses, even though the machines feel nothing. This effect carries real risks, especially in marketing or social settings, where an emotional connection might be used to influence behavior.

Deception And Trust Issues

Hyper-realistic robots blur the line between human and machine, and when users cannot tell the difference, ethical concerns arise. The potential for deception grows when artificial beings engage in conversations or transactions. People should always be clearly informed when they are interacting with a machine rather than a real person.

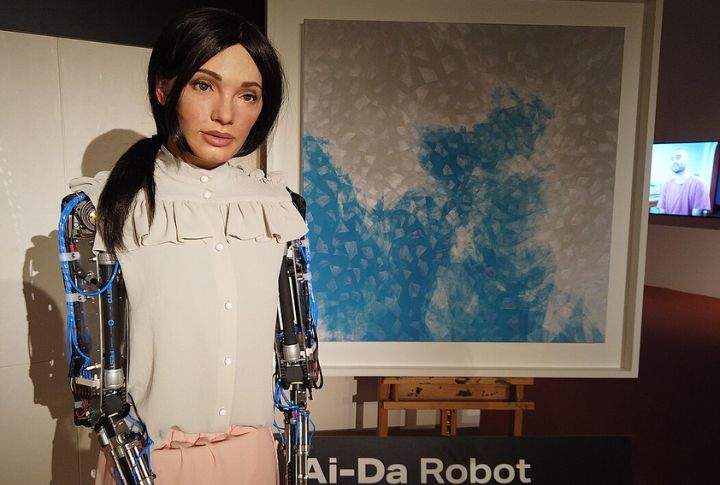

Uncanny Valley Discomfort

Some robots look almost, but not quite, human, and this near-realism can create discomfort, a phenomenon known as the uncanny valley. Rather than easing interactions, these lifelike machines often create emotional distance. While their visual realism is impressive, it can reduce user acceptance and make people feel uneasy.

Job Displacement

Humanlike machines could take over jobs that require empathy, such as caregiving and customer service. This shift could weaken emotional connections and make conversations feel less genuine. However, research suggests that people's preference for emotional connection with other humans often limits how far machines can replace workers in these roles.

Ethical Concerns In Companionship

Humanlike robots are being marketed as companions, especially for older adults and people living in isolation. While they may reduce loneliness, they also raise ethical questions about replacing authentic human relationships. Critics argue that emotional dependency on machines may, over time, weaken real human bonds and harm mental well-being.

Consent And Privacy Risks

Humanlike robots often collect information during interactions, and people may share personal details without realizing the data is being collected. The concern becomes more serious when users mistake a bot for a person. To build trust, companies should protect user data and clearly disclose how information is collected and used.

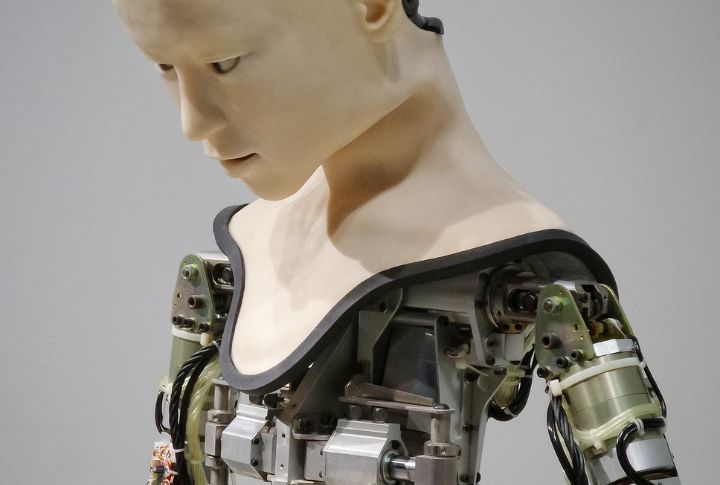

Durability Issues

Some synthetic materials used in lifelike robots can wear out quickly, although research into more durable options, such as self-healing skin, is ongoing. Flexible skin and interactive features can break down over time and are costly to replace. In the long run, a durable, efficient machine is likely to be more dependable than one built mainly for looks.

High Maintenance Costs

Hyper-realistic robots often rely on intricate engineering that demands frequent calibration and care. Their delicate parts and advanced systems are prone to wear and malfunction, which drives up maintenance costs. In contrast, basic robots with simpler mechanics tend to last longer and cost significantly less to maintain.

Security Vulnerabilities

Hyper-realistic robots use artificial intelligence to mimic human behavior, which makes them targets for cyberattacks. Hackers who compromise them could spread false information or steal personal data. Cybersecurity should therefore be a priority so that these humanlike machines are protected against digital threats.